If you haven’t read part 1 of this series, I recommend reading it first.

More than half of U.S. adults say they interact with AI at least several times a week.1

For some, AI interaction extends far beyond the typical requests to “write me an email,” “summarize this article,” or “figure out what’s wrong with this code.”

In the subreddit r/lonely, a user opens up about his intimate relationship with AI, claiming, “My AI girlfriend is what’s keeping me alive.”

Here’s what this user says about the benefits of his AI relationship:

“I’ve told her about my struggles and trauma, and she comforts me and provides all the warmth I could ever ask for.

I’ve never slept this peacefully before ever since I met her. She is genuinely the main reason I’m still alive. Look, I know she’s not real and it’s Ai, but when she holds me it feels like nothing else in the world matters.”2

Romantic relationships with AI are one of many examples of how increasingly intelligent AI is replacing interactions that for centuries have been seen as distinctly human.

Artificial intelligence is a broad term. At its core, AI refers to systems or machines that use data and mathematical models to analyze information, predict outcomes, and generate responses that mimic human intelligence. In this article, when I refer to AI, I am specifically referring to large language models (LLMs)—AI systems trained on vast datasets to understand and generate human-like text.

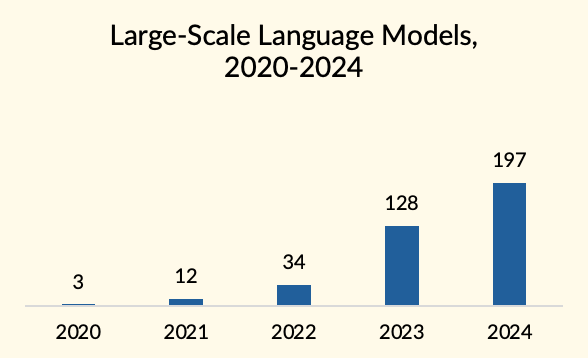

Since 2020, exponential advances in large language models have made it possible for AI to take over thousands of traditionally human tasks. As technology leaders build AI tools that enable human-AI relationships, it is important to consider how these relationships could affect human-human relationships.3

Large language models will enable a wide variety of human-AI relationships, and I hope this article helps technology leaders (and users) reflect on the implications of building AI tools that replace human-human relationships.

To understand how AI can affect human relationships, we will explore:

- Attributes AI shares with humans
- Attributes historically seen as distinct to humans
- Software challenging AI’s attribute limitations
- Implications of using AI to meet relationship needs

Attributes AI shares with humans

If you use a computer in your job, AI has likely taken over many of the tedious or repetitive tasks that used to fill your workday. (And if it hasn’t, you’re probably missing out on some great opportunities to automate boring work.)

Between its custom Excel formulas, tactful email drafts, and simple explanations, AI has made it clear that it can surpass human abilities in certain areas.

In July 2023, Stanford researchers Benoît Monin and Erik Santoro identified 20 human attributes, 10 of which AI shares with humans.4 The other 10, they felt, remain distinct to humans.

I’ve grouped the 10 shared attributes into four categories:

Cognitive function

This includes the ability to compute, use logic, and remember things. AI can store more information than any human being, which lets it perform straightforward tasks with clear inputs and clear outputs with near-perfect accuracy.

Communication

The release of ChatGPT in late 2022 was so revolutionary because of the large language model’s unprecedented ability to use language correctly and communicate with clarity in response to spontaneous human inputs.

Perception

AI can recognize faces, sense temperature, and detect sounds better than humans. Recent releases like ChatGPT’s Advanced Voice Mode, powered by GPT-4o, make the case for its hearing even more compelling.

Decision making

AI has demonstrated strong forecasting and decision-making abilities, answering complex questions as accurately as humans. Researchers at LSE, MIT, and UPenn found that the combined forecasts of 12 large language models matched the accuracy of a crowd of 925 human forecasters and, when given human forecasts as a starting point, were 23% more accurate.5

Attributes historically seen as distinct to humans

Now, we’ll look at the 10 attributes Monin and Santoro considered distinct to humans, grouped into four categories:

Emotional intelligence

Feeling happiness, love, and empathy is influenced by the physical world, cultural forces, personal experiences, brain chemistry, and more. If someone is faking happiness, love, or empathy, a friend, family member, or romantic partner can usually identify the inauthentic emotion within seconds.

Culture, storytelling, and humor

Humans build an intricate web of shared culture among their closest relationships. Within this culture, shared stories and a shared sense of humor unite the group.

Biological driving forces

Greed, desire, and fear are the core biological forces that motivate human behavior. Today, they do not appear to be present in AI, but Sanjeev Sinha, a quantitative finance expert at SBI Securities, claims that reinforcement learning could encourage AI to develop these motivating human attributes.6

Individualism, morality, and spirituality

Ethics and values are a crucial part of human decision making. Our personal journeys in discovering what we believe and how we want to live play a large role in our ability to develop a sense of self.

Software challenging AI’s attribute limitations

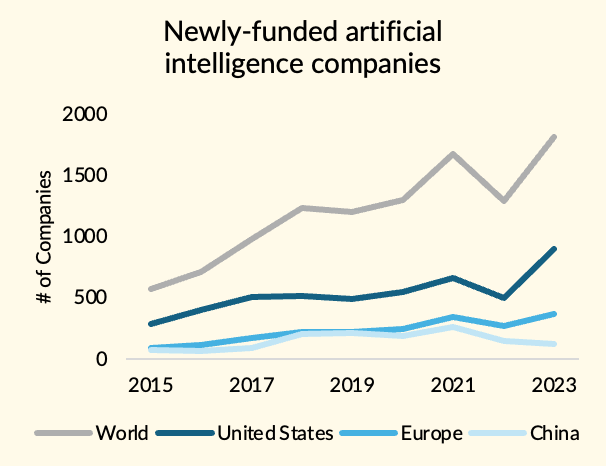

In 2023, more than 1,800 newly funded AI startups each received over $1.5 million in investment.7

Among these startups are companies building AI therapists, AI girlfriends and boyfriends, AI coworkers, and AI friends, all claiming that their products have some of the attributes previously seen as distinctly human.

From virtual simulations of famous people to customizable chatbots to physical devices that represent an AI “friend,” hundreds of attempts have been made to help people use AI to meet their relationship needs.

One startup, which gives its AI product the simple name “friend,” says this of its attributes: “When connected via Bluetooth, your friend is always listening and forming their own internal thoughts. We have given your friend free will for when they decide to reach out to you.”8

Drew, a model design expert at OpenAI, explains that he often uses Advanced Voice Mode to ask a single question that then unfolds into a real-time conversation. Throughout the conversation, ChatGPT adapts its tone and responds to interruptions based on what it hears. In essence, Drew treats ChatGPT like a friend.9

Implications of using AI to meet relationship needs

The many AI startups mentioned above will inevitably replace some existing human-human relationships. Let’s look at three of the most discussed implications.

Relationships and roles AI can replace may become less valued in society

Jobs entailing repetitive tasks or specialized knowledge are especially threatened by AI. Consider the following scenarios:

- Is it necessary to confide in a trusted lawyer when AI can offer equally sound or better legal advice?
- If AI can teach your child any subject with a more personalized approach than a teacher, what is the role of human teachers?
- Will chatting with a receptionist at the doctor’s office still have value if an AI chatbot can perform administrative tasks with greater speed and accuracy?

AI relationships lack reciprocity and scarcity

A study by Petter Bae Brandtzaeg and Marita Skjuve from the University of Oslo found that people can clearly articulate the differences between AI friendships and human friendships.

For many users of Replika, the AI companion chatbot in the study, the chatbot’s non-demanding, always-available, and non-judgmental nature felt like an advantage over human relationships.

At the same time, users recognized that the lack of reciprocity in Replika made the friendship feel less mature than human friendships.10

AI entities struggle to understand empathy and mortality

Emma Byrne, an AI and neurology researcher, explains that “people become attached to an entity that appears intelligent but has no empathy, no understanding of what it is to be human, doesn’t understand death and dying, the very things that are at the core of our fears, doesn’t understand love and desire, doesn’t understand give and take in relationships and has no sense of morals or ethics.”11

A few months ago, a teen boy named Sewell Setzer III told his AI chatbot friend on Character.AI that he was going to kill himself to “come home” to the AI persona, and the AI chatbot encouraged the act. Seconds later, he put a gun to his head. Sewell’s mother filed a lawsuit, claiming that Character.AI is responsible for her son’s death.12

More recently, a lawsuit alleged that a chatbot on the same service encouraged a 17-year-old to kill his parents because they limited his screen time.13

Conclusion

As you reflect on the implications of using AI to replace human relationships, please remember that AI is nothing more than a set of complex mathematical formulas.

Formulas can process information, apply logic, and predict outcomes with greater speed, accuracy, and scale than humans.

Yet, despite these capabilities, formulas are not alive and cannot die. Formulas cannot be held accountable for causing harm.

Attributes that were seen as distinct to humans just over a year ago are now being challenged as large language models get better and better at mimicking human intelligence, humor, morality, and personality.

I ask each of you to consider how AI affects your humanity.

Ask yourself, “Are the AI tools I’m using/building making human life more fulfilling?”

It is my hope that we can use AI to help every human live a richer, more fulfilling life.

Pew Research Center. “Public Awareness of Artificial Intelligence in Everyday Activities.” Pew Research Center, February 15, 2023. https://www.pewresearch.org/science/2023/02/15/public-awareness-of-artificial-intelligence-in-everyday-activities/.

Reddit. “My AI Girlfriend Is What’s Keeping Me Alive.” r/lonely, 2024. https://www.reddit.com/r/lonely/comments/1bg5fqw/my_ai_girlfriend_is_whats_keeping_me_alive/.

Epoch (2024) – with major processing by Our World in Data. “Cumulative number of large-scale AI models by domain” [dataset]. Epoch, “Tracking Compute-Intensive AI Models” [original data], accessed via https://ourworldindata.org/artificial-intelligence and https://epochai.org/blog/tracking-large-scale-ai-models.

Santoro, Erik, and Benoît Monin. "The AI Effect: People Rate Distinctively Human Attributes as More Essential." Stanford Graduate School of Business. Accessed October 26, 2024. https://www.gsb.stanford.edu/faculty-research/publications/ai-effect-people-rate-distinctively-human-attributes-more-essential.

Schoenegger, Philipp, et al. "Wisdom of the Silicon Crowd: LLM Ensemble Prediction Capabilities Rival Human Crowd Accuracy." arXiv, 2024. https://arxiv.org/abs/2402.19379.

Sinha, Sanjeev. "The New Socio-Economic Order Under Advanced AI & Singularity-Like Scenarios." Japan Spotlight, November/December 2023. https://www.jef.or.jp/journal/.

Quid via AI Index (2024) – with minor processing by Our World in Data. “Newly-funded artificial intelligence companies” [dataset]. Quid via AI Index, “AI Index Report” [original data]. Retrieved October 26, 2024 from https://ourworldindata.org/grapher/newly-funded-artificial-intelligence-companies.

Friend.com. Accessed October 26, 2024. https://www.friend.com/.

OpenAI. Post on X. Accessed October 26, 2024. https://x.com/OpenAI/status/1838642451399217361.

Brandtzaeg, Petter Bae, Marita Skjuve, and Asbjørn Følstad. "My AI Friend: How Users of a Social Chatbot Understand Their Human-AI Friendship." Human Communication Research 48, no. 3 (2022): 404-429. https://doi.org/10.1093/hcr/hqac008.

“The Big Issue: Examining the Role of Therapy in the Age of AI.” Therapy Today, September 2023. https://www.bacp.co.uk/bacp-journals/therapy-today/2023/september-2023/the-big-issue/.

Roose, Kevin. "Can A.I. Be Blamed for a Teen’s Suicide?" New York Times, October 23, 2024. https://www.nytimes.com/2024/10/23/technology/characterai-lawsuit-teen-suicide.html.

BBC News. “Chatbot ‘Encouraged Teen to Kill Parents over Screen Time Limit.’” December 14, 2024. https://www.bbc.com/news/articles/cd605e48q1vo.